Big data refers to collections of data sets so large and complex that they are difficult to turn into reliable business insight. The volume of data that large multinational organizations accumulate over many years is hard to manage and cannot be processed easily with conventional data processing methods. Better techniques are available to handle an organization's large sets of consumer data and extract useful information that supports sound business decisions. These techniques come from the field of big data technology. Many small and large businesses already use big data to gain insights into their operations.

What is Big Data Technology?

Big data technology is the set of software tools that analyze, process, and interpret massive amounts of structured and unstructured data, producing conclusions and present and future forecasts that help a business avoid foreseeable risks. Big data technologies fall into two main categories: operational and analytical. Operational big data deals with day-to-day data such as banking and online shopping transactions and user activity on social media platforms, whereas analytical big data deals with tasks such as weather forecasting, stock market analysis, and scientific computation.

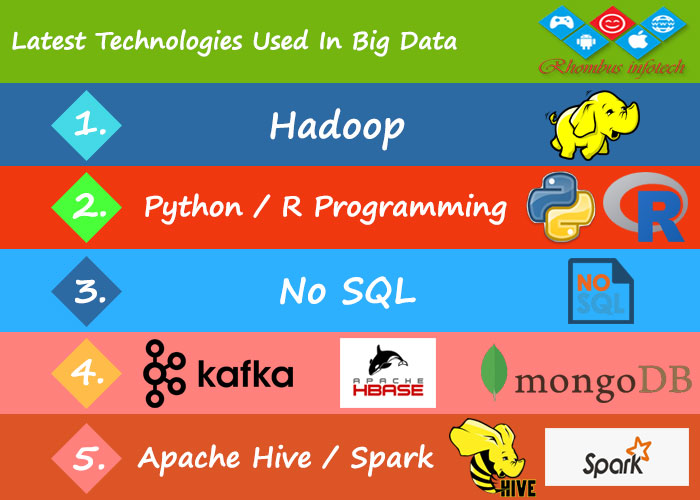

“In this article, we discuss the latest trending technologies in the big data domain that data analytics experts use in large organizations.”

—- Top Big Data Technologies On The Edge —-

According to top data industry experts, global spending on big data technologies will reach $300 billion by 2022. Industries such as manufacturing, banking, education, telecom, and entertainment and media, as well as government sectors, are moving to adopt big data technology.

- Hadoop:

Hadoop is an open-source software framework that stores and manages large volumes of data, providing enormous processing power and the ability to handle a very large number of concurrent tasks. Hadoop's distributed computing model processes huge amounts of data quickly: the more computing nodes we use, the more processing power we have. The system can be expanded to handle larger data sets simply by adding nodes, which requires little administration effort.
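
To make the distributed model more concrete, here is a minimal sketch in plain Python of the map, shuffle, and reduce steps used by Hadoop's MapReduce style of processing. It runs in a single process and does not use Hadoop itself; the function names and the sample text chunks are made up for illustration, and each chunk simply stands in for a block of data that a separate node would handle.

```python
from collections import defaultdict


def map_phase(chunk):
    """Emit a (word, 1) pair for every word in one chunk of text."""
    return [(word.lower(), 1) for word in chunk.split()]


def shuffle_phase(mapped_chunks):
    """Group all emitted values by key, between the map and reduce steps."""
    grouped = defaultdict(list)
    for pairs in mapped_chunks:
        for key, value in pairs:
            grouped[key].append(value)
    return grouped


def reduce_phase(grouped):
    """Sum the counts collected for each word."""
    return {word: sum(counts) for word, counts in grouped.items()}


def word_count(chunks):
    # Each chunk is mapped independently, which is what lets real
    # clusters spread the work across many nodes.
    mapped = [map_phase(chunk) for chunk in chunks]
    return reduce_phase(shuffle_phase(mapped))


if __name__ == "__main__":
    sample_chunks = ["big data is big", "data nodes process data"]
    print(word_count(sample_chunks))
```

Because every chunk is mapped on its own, adding more chunks (and, on a real cluster, more nodes) does not change the logic, only how much work runs in parallel.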

- Programming Languages For Big Data:

Hadoop would be of little use without the programming languages used to carry out large-scale analytical and operational tasks. The four most commonly used languages in big data are Python, R, Java, and Scala.

- Python is easy to understand and is the language most preferred by big data experts for data analysis tasks. It is a general-purpose programming language that lets programmers write short, readable code. Developers across many industry domains use it for logical and scientific tasks, and its large number of well-tested libraries makes it an excellent fit for data analysis. As a high-level language, Python makes it quick to prototype ideas while keeping the code and its execution transparent. Readable code also makes it easier for several developers to work on the same project. Its object-oriented features support advanced data structures and scientific computing operations.

- R is also an open-source programming language, used for data visualization and statistical analysis. R has a steeper learning curve than Python and is mostly used by data mining experts and data scientists around the globe for deep analysis of data. R offers a large number of data packages, graphing functions, and more, which makes it a highly capable language for large-scale deep analytics with effective data handling. Tech giants like Microsoft and Google use R for large-scale data analysis. R is well suited to exploratory data analysis, an approach that covers a variety of techniques such as extracting important variables, maximizing the insight gained from a data set, and testing underlying assumptions.

- It is important to mention that Hadoop and many related products are largely written in Java, which makes this language a strong choice for businesses working constantly in the big data domain. Python and R have long been the mainstream languages of the data science world, but they are not the only languages used for data science. Java is also a great language for data science tasks. There are plenty of reasons to use Java in data science projects: it is one of the oldest languages used for business development, so an organization is likely to have a major part of its infrastructure in Java. That means you might prototype in R or Python and then rewrite your model code in Java. Because most big data frameworks are written in Java, a developer who knows Java will usually find it easier to work with Hadoop and Hive than someone who is unfamiliar with it. Java also has a rich variety of libraries and tools for machine learning and data science projects. Its strong typing helps a lot when working with large data sets and applications: explicitly defining the types of data and variables makes the code base easier to maintain and leads to better unit testing of big data applications. Java scales well, which makes it a great choice for building more complex data applications. Last but not least, Java is often faster than interpreted languages such as Python and R. Companies like Twitter, Facebook, and LinkedIn rely on Java for their data engineering efforts.

- Use of NoSQL Database:

It is a well-known fact that our technological era generates a massive amount of unstructured data every day. Most of us are used to working with relational, structured data sets that can be stored and manipulated easily in backend databases. Unstructured data, in contrast, arrives as user information, geographical data, social media feeds, and many other forms. These massive unstructured data sets are commonly known as big data, which has become the backbone of the data analysis behind mission-critical business decisions. There are two major ways to store such huge data: in relational tables or as key-value style mappings. SQL databases are the best fit for the first, whereas NoSQL databases are the best option for the second. Large multinational organizations increasingly consider NoSQL a feasible alternative to relational databases for unstructured data. A NoSQL database can store data sets of any type and gives users the flexibility to change the data's structure. In document-based NoSQL databases there is no need to define data types in advance. NoSQL databases can also be cost-effective, as they fit well with cloud-based storage solutions. Cassandra, a NoSQL database, makes it possible to set up multiple data centres without much hassle or cost. NoSQL fits well in agile, integrated environments that need frequent changes and feedback, where a relational database is not an ideal choice.
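
The difference between the two storage styles can be sketched in plain Python. This is only an illustration under stated assumptions: the standard-library `sqlite3` module stands in for a relational database, a dictionary of JSON strings stands in for a document store, and the table name, helper functions, and sample records are all hypothetical.

```python
import json
import sqlite3

# Relational side: the table schema must be declared before any row is stored.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT, city TEXT)")
conn.execute(
    "INSERT INTO users (id, name, city) VALUES (?, ?, ?)", (1, "Asha", "Pune")
)
relational_rows = conn.execute("SELECT id, name, city FROM users").fetchall()

# Document side: a plain dict keyed by id stands in for a document store.
document_store = {}


def put_document(store, doc_id, document):
    """Store a document as JSON text; no schema is declared up front."""
    store[doc_id] = json.dumps(document)


def get_document(store, doc_id):
    """Load a stored document back into a Python dict."""
    return json.loads(store[doc_id])


# Two documents with different fields can live side by side.
put_document(document_store, "u1", {"name": "Asha", "city": "Pune"})
put_document(
    document_store,
    "u2",
    {"name": "Ravi", "feeds": ["post-1", "post-2"], "geo": {"lat": 18.5, "lon": 73.8}},
)

if __name__ == "__main__":
    print(relational_rows)
    print(get_document(document_store, "u2"))
```

Adding a new field to the relational table would require changing its schema, while the second document above carries extra nested fields without any change to the store.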

- Use of MongoDB:

MongoDB is an agile, open-source, cross-platform NoSQL document database. It is known for its storage capacity and stores documents in BSON, a binary form of JSON. MongoDB is mostly used for its high scalability, availability, and performance. It has some remarkable built-in features that make it suitable for businesses that need to make real-time decisions and create personalized, data-driven connections with their users.

- Use of Hive:

Hive is a data warehouse tool built on the Hadoop platform that provides an SQL-like interface to data stored in Hadoop. Its query language is HiveQL. Hive translates SQL-like HiveQL queries into MapReduce jobs and then deploys them to the Hadoop platform. HiveQL also supports custom MapReduce scripts that can be plugged into queries. Hive allows flexible schema design and supports data serialization and deserialization.
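
As a rough illustration of how an SQL-like query can be expressed as a MapReduce job, the sketch below mimics a simple grouped count in plain Python. It is not the plan Hive actually generates; the `orders` table, its columns, and the sample rows are hypothetical.

```python
from collections import defaultdict

# Hypothetical query this sketch mimics:
#   SELECT city, COUNT(*) FROM orders GROUP BY city;


def map_rows(rows):
    """Map step: emit (group key, 1) for each input row."""
    for row in rows:
        yield row["city"], 1


def reduce_counts(pairs):
    """Reduce step: add up the values emitted for each key."""
    totals = defaultdict(int)
    for key, value in pairs:
        totals[key] += value
    return dict(totals)


# Made-up sample rows standing in for the orders table.
orders = [
    {"order_id": 1, "city": "Delhi"},
    {"order_id": 2, "city": "Mumbai"},
    {"order_id": 3, "city": "Delhi"},
]
result = reduce_counts(map_rows(orders))

if __name__ == "__main__":
    print(result)
```

The `GROUP BY` column becomes the key emitted by the map step, and the aggregate (`COUNT(*)` here) becomes the work done in the reduce step.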

- Use of Spark:

Apache Spark is one of the major open-source projects for data processing. It resembles MapReduce but outpaces it in speed, ease of use, and sophistication of analytics. Apache Spark reduces the development time that Hadoop usually requires. This makes for smooth streaming as well as interactive analysis of data. Spark has integrated modules for streaming, graph processing, and SQL support. This is why it is regarded as one of the fastest big data processing tools. It supports all the key big data languages: Python, R, Java, and Scala.

- Use of HBase:

Apache HBase is an open-source NoSQL database that runs on Hadoop and offers real-time read and write access to large data sets. It scales linearly to manage large data sets with numerous rows and columns, and smoothly combines data sources with different structures and schemas. HBase is one of the Apache Hadoop add-ons and works alongside tools like Hive, Pig, and ZooKeeper. Businesses employ Apache HBase's low-latency storage for situations that demand real-time analysis and tabular data for consumer applications.
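
The idea of a table whose rows can each carry a different set of columns can be sketched with a small in-memory class. This is a toy model in plain Python, not the HBase client API; the class name, method names, row keys, and column names are all assumptions made for illustration.

```python
from collections import defaultdict


class SparseTable:
    """Toy wide-column table: row key -> {"family:qualifier": value}."""

    def __init__(self):
        self._rows = defaultdict(dict)

    def put(self, row_key, column, value):
        """Write one cell; a row only stores the columns it actually has."""
        self._rows[row_key][column] = value

    def get(self, row_key, column=None):
        """Read a whole row, or a single cell when a column is given."""
        row = self._rows.get(row_key, {})
        return dict(row) if column is None else row.get(column)

    def scan(self, start_key, stop_key):
        """Return rows with start_key <= key < stop_key in sorted key order."""
        return [
            (key, dict(self._rows[key]))
            for key in sorted(self._rows)
            if start_key <= key < stop_key
        ]


# Made-up sample data: two rows with different columns.
table = SparseTable()
table.put("user#001", "profile:name", "Asha")
table.put("user#001", "profile:city", "Pune")
table.put("user#002", "profile:name", "Ravi")
table.put("user#002", "activity:last_login", "2019-05-01")

if __name__ == "__main__":
    print(table.get("user#001"))
    print(table.scan("user#001", "user#003"))
```

Keeping rows sorted by key is what makes range scans such as the one above cheap, which is one reason row key design matters in this kind of store.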

- Use of Kafka:

Kafka is another open-source, scalable, fast, and secure platform that acts as a bridge between several key open-source systems such as Spark and NiFi, as well as third-party tools. Kafka resembles a messaging system but adds some distinctive features of its own. It is a distributed streaming platform for handling real-time data feeds. Since Kafka is a distributed system, topics are partitioned and replicated across numerous nodes. Messages are simply byte arrays, so developers can use them to store any object in any format; String, JSON, and Avro are the most common. Written in Java and Scala, Kafka is a fast, scalable, durable, and fault-tolerant publish-subscribe messaging system that works in combination with Apache Storm, Apache HBase, and Apache Spark for real-time analysis and rendering of streaming data.
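
The way a topic is split into partitions and carries messages as raw bytes can be sketched in plain Python. This toy class is not the Kafka client API and leaves out replication entirely; the class and method names are hypothetical, and CRC32 is used as the key hash purely for illustration, not because Kafka uses it.

```python
import json
import zlib


class ToyTopic:
    """In-memory stand-in for a topic split into a fixed number of partitions."""

    def __init__(self, num_partitions):
        self.partitions = [[] for _ in range(num_partitions)]

    def partition_for(self, key: bytes) -> int:
        # CRC32 is used only for illustration; it is not Kafka's hash function.
        return zlib.crc32(key) % len(self.partitions)

    def publish(self, key: bytes, value: bytes) -> int:
        """Append a message to the partition chosen by its key."""
        index = self.partition_for(key)
        self.partitions[index].append((key, value))
        return index

    def consume(self, index, offset=0):
        """Read messages from one partition, starting at the given offset."""
        return self.partitions[index][offset:]


# Messages are plain bytes; here JSON is encoded to bytes before publishing.
topic = ToyTopic(num_partitions=3)
first = topic.publish(b"user-42", json.dumps({"event": "login"}).encode("utf-8"))
second = topic.publish(b"user-42", json.dumps({"event": "logout"}).encode("utf-8"))
events = [json.loads(value) for _, value in topic.consume(first)]

if __name__ == "__main__":
    print(first == second)
    print(events)
```

Messages that share a key land in the same partition, so a consumer reading that partition sees them in the order they were published.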

Conclusion:

We hope you enjoyed reading this article! Big data analytics is one of the most promising tools for the future. With big data analytics, better-informed decisions can be made while the focus remains on moving business operations forward. Big data technologies are changing how this is done: logical and statistical analysis makes it possible to weed out bad information in a highly efficient manner.